Getting Started With Helm

Helm simplifies Kubernetes application deployment and management by packaging manifests into charts, enabling consistent deployments across environments and facilitating rollbacks in case of errors. Harness enhances this process by integrating Helm for seamless deployment and fault tolerance, ensuring applications can automatically revert to stable versions during failures.

With Kubernetes' popularity and widespread adoption, teams need a reliable way to define, install, upgrade, and maintain even the most complex Kubernetes applications.

One approach is to deploy Kubernetes manifest files manually, editing them as required. This is slow and error-prone because nothing enforces consistency between deployments. With a fresh Kubernetes cluster, you need to define the namespace, create storage classes, and then deploy your application to the cluster. If something goes wrong along the way, tracking down the problem becomes tedious.

The other approach is to package all the Kubernetes manifests with a package manager like Helm, which lets you define, install, and upgrade your applications using a Helm chart. When you deploy using Helm, you define all these resources in one place, making it much easier to deploy your application consistently across environments.
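To make the contrast concrete, here is a minimal sketch of what a Helm-based deployment looks like from the command line. The release name and namespace are hypothetical placeholders, and the chart folder name is borrowed from the repository described later in this article. The script only prints the command unless you opt in by setting `RUN_HELM=1`.

```shell
#!/bin/sh
# Hypothetical values: replace them with your own release, chart, and namespace.
RELEASE="my-app"             # release name (assumption)
CHART_DIR="./harness-helm"   # chart folder, as named later in this article
NAMESPACE="demo"             # target namespace (assumption)

# One command installs the release if it is missing and upgrades it otherwise.
# --create-namespace removes the separate manual namespace step.
HELM_CMD="helm upgrade --install $RELEASE $CHART_DIR --namespace $NAMESPACE --create-namespace"
echo "$HELM_CMD"

# Set RUN_HELM=1 to run the command against the cluster in your current
# kubeconfig context. The variable is left unquoted on purpose so the
# shell splits it into separate arguments.
if [ "${RUN_HELM:-0}" = "1" ]; then
  $HELM_CMD
fi
```

Running the same command a second time upgrades the existing release instead of failing, which is what makes repeated deployments consistent.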

This article introduces you to Helm, a CNCF graduated project, and shows you how to deploy a Helm chart to your Kubernetes cluster using Harness. You’ll also learn how to embed fault tolerance so that whenever a deployment fails, the platform rolls the change back to the previous stable version.

About Helm

Helm is a package manager for Kubernetes that allows you to easily install and manage applications on your Kubernetes cluster. You can use Helm to find, install, and upgrade software for your cluster that others have published. It also helps you share your own software with others through the charts that you create.

Deis (now part of Microsoft) launched the Helm project in 2015 as a way to package and deploy applications on Kubernetes. Helm was influenced by package managers such as apt and Homebrew. Its name continues the nautical theme of Kubernetes, whose own name comes from the Greek word for "helmsman" or "pilot."

Helm is now a graduated project of the Cloud Native Computing Foundation (CNCF). Helm 3, the major version used in this tutorial, was released in 2019.

Benefits of Helm

As a Kubernetes package manager, Helm brings several benefits:

1. Helm is a powerful and easy-to-use tool that helps you manage your Kubernetes applications and share them with the help of charts.

2. It makes it easy to deploy, remove, and manage applications on Kubernetes with simple commands.

3. It helps you manage your Kubernetes resources efficiently from a single pane of glass.

4. It makes it easy to roll back changes to your Kubernetes applications, because every release is versioned.

5. It is an open source project that is actively maintained by the community, so troubleshooting becomes easier and you can submit patches for your own requirements.
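The versioning and rollback benefit can be sketched with two Helm CLI commands. The release name and namespace below are hypothetical, and the functions are only defined here, not called, so nothing touches your cluster until you invoke them yourself.

```shell
#!/bin/sh
# Hypothetical values: replace them with your own release and namespace.
RELEASE="my-app"
NAMESPACE="demo"

# List every recorded revision of the release, with its status.
show_history() {
  helm history "$RELEASE" --namespace "$NAMESPACE"
}

# Roll back to the revision number passed as the first argument and
# wait until the restored resources are ready.
roll_back() {
  helm rollback "$RELEASE" "$1" --namespace "$NAMESPACE" --wait
}
```

For example, after sourcing this file you could run `show_history` to pick a revision and then `roll_back 1` to return to the first one.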

Getting started

Before you start this tutorial, make sure you have the following prerequisites in place:

- Working knowledge of kubectl

- Basic understanding of Helm charts and values.yaml

- Access to a cloud cluster or a local cluster

- A GitHub account to fork the repository and update charts

- A free Harness account, which you can sign up for here

- Familiarity with the Getting Started with Harness blog

- A running Kubernetes cluster with the Delegate set up
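If you want to confirm the command-line side of these prerequisites, a small check like the following may help. It is only a sketch: it assumes you want both `kubectl` and `helm` on your local machine, although a local `helm` is only needed for trying charts outside Harness.

```shell
#!/bin/sh
# Report whether each command-line tool is available on the PATH.
check_tools() {
  for tool in kubectl helm; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool"
    fi
  done
}

check_tools

# Optional follow-up checks once the tools are installed:
#   kubectl version --client   # prints the kubectl client version
#   helm version --short       # prints the Helm version
#   kubectl get nodes          # confirms the cluster is reachable
```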

High Level Steps

- Set up your Account

- Create a Project

- Create a Pipeline

- Create Service

- Create Environment

- Create Execution Stage

- Run Pipeline

Project Setup

Follow these steps to get started:

- Sign up for a free trial of Harness

- Select the TryNextGen tab for a seamless experience.

- Create a new project and select the Continuous Delivery module.

- Start creating a new pipeline, and add details by following the next steps.

Service

After you have started to create your pipeline, the first thing you need to do is create a Service.

Now set up your service and manifest as follows, and make sure you have your connectors in place.

For manifests, fork the following GitHub repository, use your fork as the location, and select Helm Chart as the manifest type. Forking is important because you need to edit your values.yaml in the later stages of this tutorial!

Chart Structure

The repository contains a harness-helm/ folder which contains your chart. If you open the folder the structure contains the following:

1. templates/ - A folder with all your object manifests

2. Chart.yaml - A file with metadata about the Helm chart

3. values.yaml - A file containing your default configuration
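To see how these three pieces fit together, the sketch below builds a throwaway chart skeleton in a temporary directory. The chart name, values, and Service template are illustrative assumptions and are not copied from the tutorial repository.

```shell
#!/bin/sh
# Build a minimal chart skeleton in a temporary directory.
# All file contents are illustrative, not taken from the tutorial repository.
CHART_ROOT="$(mktemp -d)/demo-chart"
mkdir -p "$CHART_ROOT/templates"

# Chart.yaml: chart metadata (apiVersion v2 is the Helm 3 chart format).
cat > "$CHART_ROOT/Chart.yaml" <<'EOF'
apiVersion: v2
name: demo-chart
description: A minimal example chart
version: 0.1.0
EOF

# values.yaml: defaults that templates read through .Values.
cat > "$CHART_ROOT/values.yaml" <<'EOF'
service:
  type: LoadBalancer
  port: 80
EOF

# templates/service.yaml: a manifest with placeholders filled in at render time.
cat > "$CHART_ROOT/templates/service.yaml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: {{ .Release.Name }}-svc
spec:
  type: {{ .Values.service.type }}
  ports:
    - port: {{ .Values.service.port }}
  selector:
    app: {{ .Release.Name }}
EOF

# Show the resulting layout.
find "$CHART_ROOT" -type f | sort
```

If you have Helm installed locally, `helm lint "$CHART_ROOT"` is a quick way to check a skeleton like this for structural problems.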

Now, to list your manifest details from your repository, populate the following fields as per the diagram below:

The values.yaml file gives you a single pane of glass for editing all the defaults without digging into the manifests! The other parameters that you edit are:

- Manifest Name - A name you use to reference your manifest

- Git Fetch Type - Defines how your chart is pulled

- Branch - Defines which branch your chart is in

- Chart Path - The root directory of your chart

- Helm Version - The version of Helm to use; in this tutorial, you’re using Helm V3

- Values.yaml - Files whose values override your chart's defaults
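The override behaviour of the last parameter can be tried outside Harness with the Helm CLI. The sketch below writes a small override file and prints a `helm template` command that would render the chart with it. The keys `service.type` and `service.port` are hypothetical and may not exist in the tutorial chart, and `my-app` is a placeholder release name.

```shell
#!/bin/sh
# Write an override file; keys here are hypothetical examples.
OVERRIDE_FILE="$(mktemp)"
cat > "$OVERRIDE_FILE" <<'EOF'
service:
  type: ClusterIP
EOF

# Precedence, lowest to highest: chart defaults in values.yaml,
# then files passed with --values, then individual --set flags.
HELM_ARGS="--values $OVERRIDE_FILE --set service.port=8080"

# helm template renders manifests locally without contacting a cluster.
echo "helm template my-app ./harness-helm $HELM_ARGS"
```

Rendering locally first is a cheap way to check that an override produces the manifest you expect before a pipeline applies it.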

Next, specify your environment and connect to it using a delegate, or the Master URL method if you prefer!

Execution Stage

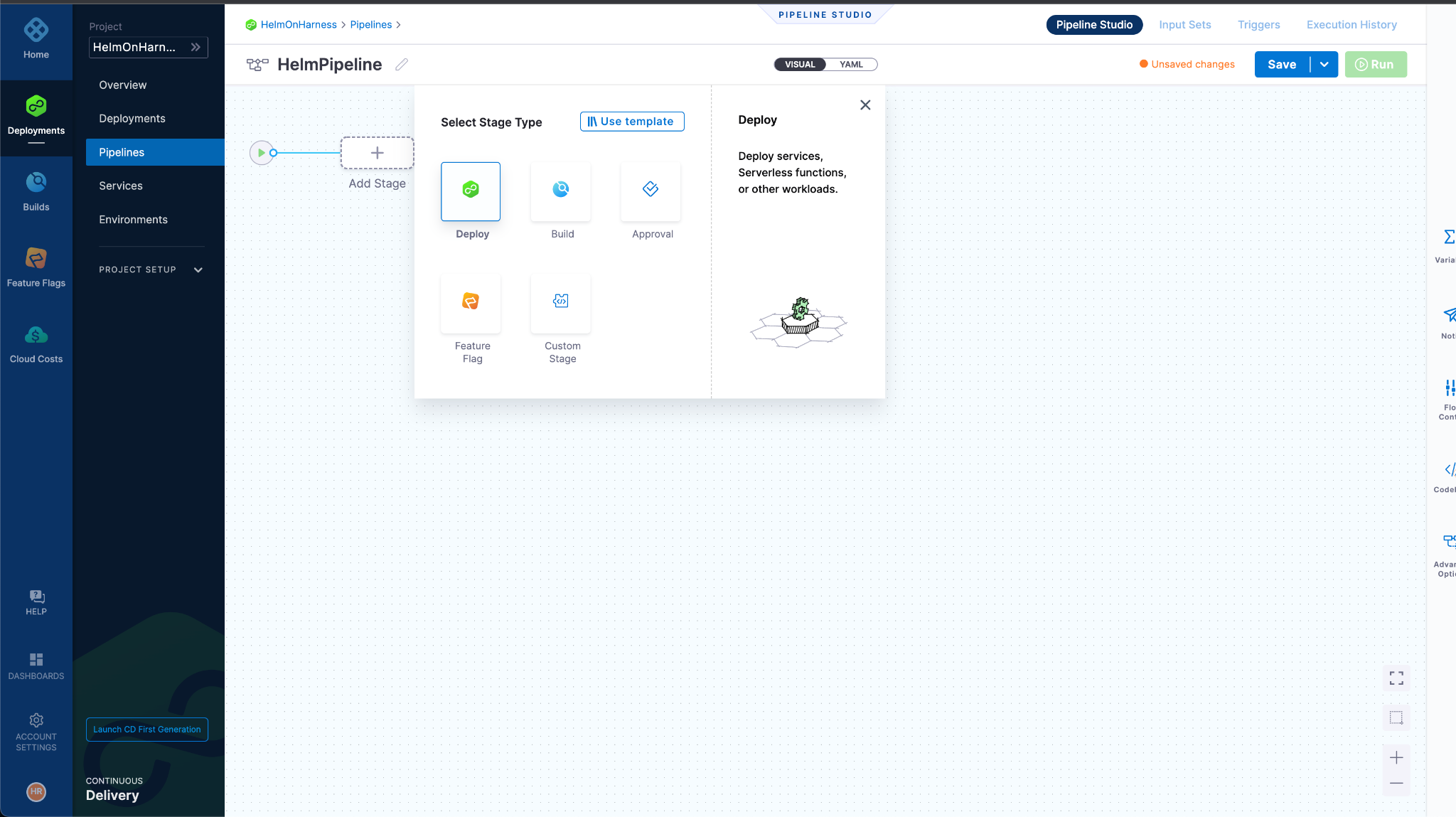

After your environment is ready and the cluster is connected, add your steps. As Helm is the centre of this tutorial, you’ll be using the Helm deployment.

There are many other strategies, such as rolling, canary, and blue-green deployments, which you can use according to your requirements.

Click on Add Steps and then you should see the following:

Choose Helm Deployment!

Add the parameters and apply the changes, then save your pipeline using the blue button on the top right!

Running Pipeline

Click on Run to start your pipeline and wait to see your artifacts being deployed on your cluster.

If you have done the steps correctly, you should see green check marks as below:

If you’re on a public cloud cluster, the chart in the repository should expose an endpoint through a LoadBalancer service. You can find it with the command `kubectl get svc` and reach it by visiting its external IP.
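If you would rather print only the address than read it from the full table, a JSONPath query is one option. The service name and namespace below are hypothetical, so take the real name from the `kubectl get svc` output. The function is only defined here, not called.

```shell
#!/bin/sh
# Hypothetical values: take the real service name from `kubectl get svc`.
SERVICE_NAME="my-app-svc"
NAMESPACE="default"

# Print the external IP assigned to the LoadBalancer service.
# Some providers publish a hostname instead of an IP; in that case
# query .status.loadBalancer.ingress[0].hostname instead.
external_ip() {
  kubectl get svc "$SERVICE_NAME" --namespace "$NAMESPACE" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```

The output stays empty until the cloud provider has finished provisioning the load balancer, so you may need to call `external_ip` again after a short wait.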

Conclusion

I hope this post helped you understand how Helm works on Harness and how it makes your workflow simpler. Later in this series, you'll learn how Helm's fault tolerance can be easily implemented in Harness to protect your workflow against failures and invoke rollbacks when necessary.

Until then, don’t forget to request your personalized Harness demo or download a free trial. If you are facing any issues, join our community Slack channel and get your questions answered!